General information

How to get access to the computers at CINES

Any researcher in the national community can, with the authorization of their laboratory, request resources on the computers at CINES. Requests are made through the DARI application (Demande d’Attribution de Ressources Informatiques). The application must be submitted during one or both of the two yearly sessions (April and September).

Throughout the year, users who have consumed all their HPC hours can submit a supplementary request on the DARI web site.

Such a request must be justified in an objective and reasoned manner, and a mail must be sent to

This request will be reviewed by the responsible committees.

The CINES charter states that logins are strictly personal and non-transferable. Any breach of this rule may result in the revocation of the account and of the right to access the resources at CINES. The charter can be read here.

Data management

All the information can be found here.

In summary, there are 4 data spaces, including:

- /home: for compilation, and to keep your programs and the libraries they need at run time

- /scratch: TEMPORARY space; you can submit your jobs from this space and also use it for temporary I/O during the run

- /store: a more secure space, to keep your important output

Note that /scratch is a temporary space: you MUST copy the results you want to keep to /store.

You can use the etat_projet command, which reports, among other things, the consumption of the project as well as the occupation and limits of the various storage spaces.

Be careful: this tool scans directories exhaustively and recursively, so depending on the directory in which you run it, it can take a very long time.

You can try to target the branches of the tree structure that interest you the most to avoid scanning your entire space. It is possible to save the results of a search in a file so that they can be processed later without having to restart the command.
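As a minimal sketch of saving the results for later processing, the snippet below redirects the output of etat_projet to a file and then searches the saved copy. The file name and the searched keyword are illustrative assumptions, not part of the tool; the guard only keeps the snippet harmless on a machine where etat_projet is not installed.

```shell
# Hypothetical file name for the saved report (one file per day).
report="$HOME/etat_projet_$(date +%Y%m%d).txt"

if command -v etat_projet >/dev/null 2>&1; then
    # Run the (possibly slow) scan once and keep its output.
    etat_projet > "$report" 2>&1
    # Later queries read the saved file instead of rescanning.
    # "scratch" is only an example keyword; adapt it to the report layout.
    grep -i "scratch" "$report" || echo "no matching line in $report"
else
    echo "etat_projet not found: run this on a CINES machine" >&2
fi
```

Running the command from the sub-directory you are interested in, rather than from the top of your space, keeps the scan short.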

The information about the maximum quotas is available here.

Software

All the software installed by CINES can be used through the module command. You can use module avail to list all the modules available on the machine.

The module command can be called in several ways:

- module avail: lists all the modules installed on the machine (e.g. abinit).

- module load nom_module: loads a module, e.g. module load abinit.

- module show nom_module: gives information about a module (version, conflicts with other modules, etc.).

- module list: lists all the modules loaded in the current session (the current session only).

Once the command module load nom_module has been called, you can use the software.

Multiple versions of a software package are available on the machines. You can list all the different versions with the command module avail.

If you cannot find the version you are looking for in the listing, you can contact the support team (svp@cines.fr), who can help you run your programs with the current versions or install a newer version.

If the software is licensed, you must first provide us with the license; we will then install the software.

If the software is specific to your research community, we encourage you to install it in your own environment (in your $SHAREDDIR, for example).

Otherwise, you can contact the support team at CINES (svp@cines.fr) to ask for a new installation. Your request will be carefully studied by the support team, and an answer will be sent as soon as possible.

Machines availability

You can check the availability of the machines on this web page.

Occigen

General

The Occigen supercomputer comprises:

- 2106 dual-socket nodes with Intel® Xeon® Haswell processors @ 2.6 GHz, with twelve cores per socket.

- 1260 dual-socket nodes with Intel® Xeon® Broadwell processors @ 2.6 GHz, with fourteen cores per socket.

The AVX2 vectorization technology is available. Please note that hyperthreading is enabled on each node.

The operating system is built by BULL, and named BullX SCS AE5.

/scratch is a Lustre file system for HPC applications.

Your /home directory is hosted on a Panasas file system.

/store is a Lustre file system; it is the backed-up storage space.

$HOMEDIR (Panasas file system) is mainly dedicated to your source files, and $STOREDIR to permanent data files (input and result files). At run time, your input and output files should be placed in $SCRATCHDIR.

Usage example:

cd $SCRATCHDIR

Finally, if you want to share files with other logins of your Unix group, $SHAREDHOMEDIR and $SHAREDSCRATCHDIR are available.

For the /home space, quotas are applied per user (volume). You can consult the following file:

cat $HOMEDIR/.home_quota

For the /scratch space, quotas apply to Unix groups (volume).

Please use the etat_projet command.

Access to Occigen

Linux: type the usual command: ssh login_name@occigen.cines.fr

Windows: use an SSH client (e.g. PuTTY) and connect to occigen.cines.fr

It is necessary to redirect the graphic output to an X server. To do that under Linux, please connect with the command ssh -X login_name@occigen.cines.fr.

Under Windows, use Xming for example, and activate X11 forwarding from the SSH client menu.

Under Linux, files must be copied with the scp command from your machine to occigen.cines.fr.

Under Windows, please use file transfer software such as FileZilla and specify occigen.cines.fr and port 22.

Submitting jobs

The job scheduler is SLURM (Simple Linux Utility for Resource Management). This software comes from SchedMD. The version on Occigen was updated by Bull/ATOS.

First, write a script containing the sbatch directives; an example is given below. The first line of this file must always specify the shell used to interpret the commands: #!/bin/bash. The lines beginning with a hash sign (#) and containing the word SBATCH will be taken into account by the SLURM scheduler.

They give instructions related to the reservation of the resources you actually need and to the wall clock time limit.

Example of a SLURM script for an MPI program running on 2 Broadwell nodes and 56 cores

#!/bin/bash
#SBATCH -J job_name
#SBATCH --nodes=2
#SBATCH --constraint=BDW28
#SBATCH --ntasks=56
#SBATCH --ntasks-per-node=28
#SBATCH --threads-per-core=1
#SBATCH --mem=30GB
#SBATCH --time=00:30:00
#SBATCH --output job_name.output
module purge
module load intel
module load openmpi # feel free to test several versions
srun --mpi=pmi2 -K1 -n $SLURM_NTASKS ./mon_executable param1 param2 …
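In the example script, the total number of tasks is the number of nodes multiplied by the number of tasks per node (2 x 28 = 56). The sketch below recomputes these values before they are written into a script; the variable names are illustrative, and the per-node core count assumes the Broadwell nodes described earlier (2 sockets of 14 cores).

```shell
# Values taken from the example script (2 Broadwell nodes, 28 cores each).
nodes=2
tasks_per_node=28
cores_per_node=28   # BDW28 nodes: 2 sockets x 14 cores

# Total number of MPI tasks to request with --ntasks.
ntasks=$((nodes * tasks_per_node))

# Refuse a layout that places more tasks than physical cores on a node.
if [ "$tasks_per_node" -gt "$cores_per_node" ]; then
    echo "too many tasks per node" >&2
else
    echo "#SBATCH --nodes=$nodes"
    echo "#SBATCH --ntasks-per-node=$tasks_per_node"
    echo "#SBATCH --ntasks=$ntasks"
fi
```

For Haswell nodes (constraint HSW24), the same calculation would use 24 cores per node.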

The interactive mode is forbidden on a login node.

To work interactively on a compute node, first type the following command on the login node to get access to some resources (compute nodes):

salloc --constraint=BDW28 -N 1 -n 10 -t 10:00

Then, when the resources become available, type in the same window:

srun mon_executable

If your executable can write checkpoint/restart files, please respect the fair-play rules and submit a new job for the continuation.

If your application does not support checkpoint/restart yet, please send a well-argued request to svp@cines.fr and it will be examined. Even if you are granted such an authorization, keep in mind that the resources that can host this queueing class are limited and that the priority of this kind of job is lower. Your job is also largely exposed to the risk of node failure, so the general advice is to implement checkpointing in your code in order to avoid wasting computational time in such a case.

Half of the 2106 Haswell nodes in the first part of Occigen have 128 GB of memory.

To tell the SLURM scheduler that your job actually needs them, add the following lines to your script:

#SBATCH --mem=118000

#SBATCH --constraint=HSW24

You can find on this web page some examples of scripts using MPI and OpenMP (hybrid MPI+OpenMP).

We have a tool on Occigen that you can use to run sequential tasks inside an MPI job. These tasks are command lines, with one line corresponding to one executable and its parameters.

The tool’s name is pserie_lb. You can use it by calling the module pserie/0.1.

The documentation on pserie_lb can be read here in chapter 4.
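As a small illustration of the "one line = one executable and its parameters" convention, the sketch below generates such a task file with a loop. The file name, the executable name and the input files are hypothetical, and the exact way pserie_lb reads this file is not shown here: please refer to its documentation for the invocation.

```shell
# Hypothetical names: adapt the executable and the input files to your case.
tasks_file="tasks.txt"

# Start from an empty file, then append one command line per sequential task.
: > "$tasks_file"
for i in 1 2 3 4; do
    echo "./mon_executable input_$i.dat" >> "$tasks_file"
done

# Show the result: one line = one executable with its parameters.
cat "$tasks_file"
```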

Files in $SCRATCHDIR can be striped so that accesses are parallelized.

Simply use the striping commands described on this page, or in the Lustre documentation.
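As a hedged sketch of what striping looks like in practice, the snippet below uses the standard Lustre commands lfs setstripe and lfs getstripe on a directory. The directory name and the stripe count of 4 are illustrative assumptions; suitable values depend on your file sizes and on the site recommendations.

```shell
# Hypothetical directory; falls back to the current directory
# when $SCRATCHDIR is not defined.
dir="${SCRATCHDIR:-.}/striped_output"
mkdir -p "$dir"

if command -v lfs >/dev/null 2>&1; then
    # New files created in this directory are spread over 4 OSTs
    # (4 is only an example value).
    lfs setstripe -c 4 "$dir"
    # Display the striping layout that is now in effect.
    lfs getstripe "$dir"
else
    echo "lfs not found: striping requires a Lustre client" >&2
fi
```

Striping set on a directory applies to files created in it afterwards; existing files keep their layout.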

Please add the following lines at the beginning of the slurm script :

#!/bin/bash
. /usr/share/Modules/init/bash

Be careful not to forget the dot (“.”) at the start of the second line.

Add to the file ~/.emacs:

;; disable the version control
(setq vc-handled-backends nil)

This command makes it easier to list your directories (on the Lustre file systems only, i.e. /store and /scratch):

/usr/local/bin/lfs_ls

This command does not work well with /home.

There is a technical note here. It can be downloaded here.

VASP offers two levels of parallelization:

- over bands (see NPAR)

- and over k-points (see KPAR).

In your input file (INCAR), these parameters must be carefully chosen to obtain the best performance, taking the Occigen architecture into account.

Parameters of the INCAR file

The KPAR value

What does this parameter represent?

The KPAR parameter manages the parallelization over the k-points: it represents the number of k-point groups created to parallelize along this dimension. The Occigen supercomputer has dual-socket nodes with 12 cores per socket (Haswell nodes). Keep in mind that one should try to use the highest number of cores available on each node while complying with the constraints described below.

For example, if the case under study has 10 k-points and KPAR is set to 2, there will be 2 k-point groups, each performing calculations on 5 k-points. Similarly, if KPAR is set to 5, there will be 5 groups, each with 2 k-points.

Currently KPAR is limited to values that divide exactly both the total number of k-points and the total number of cores used by the job.
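The divisibility constraint above can be checked mechanically. The following sketch lists every KPAR value that divides both the number of k-points and the number of cores; the function name valid_kpar is hypothetical and introduced only for this illustration.

```shell
# Print every KPAR value that divides both the number of k-points
# and the number of cores. The body runs in a subshell, so the
# working variables do not leak into the caller.
valid_kpar() (
    nkpts=$1
    ncores=$2
    result=""
    k=1
    while [ "$k" -le "$nkpts" ]; do
        if [ $((nkpts % k)) -eq 0 ] && [ $((ncores % k)) -eq 0 ]; then
            result="$result $k"
        fi
        k=$((k + 1))
    done
    echo "${result# }"
)

valid_kpar 10 48   # 10 k-points on 2 Haswell nodes (48 cores)
valid_kpar 10 40   # same k-points on 40 cores
```

With 10 k-points, a 48-core job only admits KPAR = 1 or 2, whereas a 40-core job also admits 5 and 10. This is one reason why the number of cores and KPAR should be chosen together.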

The NPAR value

What does this parameter represent?

The NPAR parameter manages the parallelization over the bands: it determines the number of bands that are handled in parallel, each band being distributed over some cores. The number of cores dedicated to a band is equal to the total number of cores divided by NPAR. If NPAR=1, all the cores work together on every individual band.

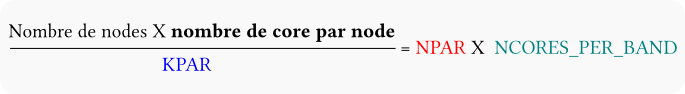

NPAR must be adjusted in agreement with the KPAR value according to the following formula:

The best performance seems to be obtained on Occigen for NCORES_PAR_BAND ranging from 2 to 4.
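As a hedged sketch of how these values fit together: the VASP documentation relates the number of cores per band (called NCORE there, NCORES_PAR_BAND here) to the other parameters by cores per band = total cores / (KPAR x NPAR). Assuming that relation, NPAR can be derived from a target number of cores per band as follows; the numeric values are illustrative only.

```shell
total_cores=48       # e.g. 2 Haswell nodes x 24 cores
kpar=2               # must divide the number of k-points and of cores
cores_per_band=4     # target value in the recommended range (2 to 4)

# Cores available inside one k-point group.
cores_per_group=$((total_cores / kpar))

# Number of bands treated in parallel inside each group.
npar=$((cores_per_group / cores_per_band))

echo "KPAR = $kpar"
echo "NPAR = $npar"
```

Here each k-point group gets 24 cores, and 4 cores per band gives NPAR = 6. Choose values for which the divisions are exact.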

Selecting the right number of cores for a VASP calculation is very important, because using too many cores is inefficient. As a rule of thumb, choose a number of cores of the same order of magnitude as the number of atoms. This estimate then needs further refinement. When the workload is split over several compute nodes, you have to ensure that a sufficient amount of work is allocated to each core.

If you encounter “out of memory” problems, the following actions should be taken:

- Select the high-memory nodes by adding a special directive to your job script (#SBATCH --mem=120GB).

- If this is not sufficient, reconsider the parallelization: try to increase the number of cores and/or to leave some cores of each node unused.

The following is an example of a submission script for Occigen:

#!/bin/sh
#SBATCH --nodes=2
#SBATCH --constraint=HSW24
#SBATCH --ntasks-per-node=24
#SBATCH --ntasks=48
#SBATCH --mem=118000 # if the default 64 GB nodes are not sufficient
#SBATCH --time=24:00:00
#SBATCH --exclusive
#SBATCH --output vasp5.3.3.out
module purge
module load intel
module load openmpi
ulimit -s unlimited
srun --mpi=pmi2 -K1 /path/vasp-k-points

Visualization

General

The visualization cluster has a login node (visu.cines.fr) and 4 nodes for visualization and pre/post-processing (visu1 to visu4).

The processors are of the Broadwell type: dual-socket nodes with 14 cores per socket are available, with 256 GB of memory per node. A full description can be found here.

Each node has one Nvidia Tesla P100 GPU with 12 GB of memory.

Hyperthreading is activated on these processors.

Access to visualization

To access the visualization cluster, connect with an ssh command to visu.cines.fr. Under Windows, use an SSH client (e.g. PuTTY) and connect to visu.cines.fr.

Under Linux, transfer files from your laboratory to visu.cines.fr with the scp protocol.

Under Windows, use file transfer software such as FileZilla, specifying the host visu.cines.fr and port 22.

If you cannot establish a simple ssh connection to a node (e.g. visu1.cines.fr), the problem may come from outbound filtering at your local site. We advise you to contact your IT department.

Compute on the machine

The job scheduler is SLURM(Simple Linux Utility for Resource.