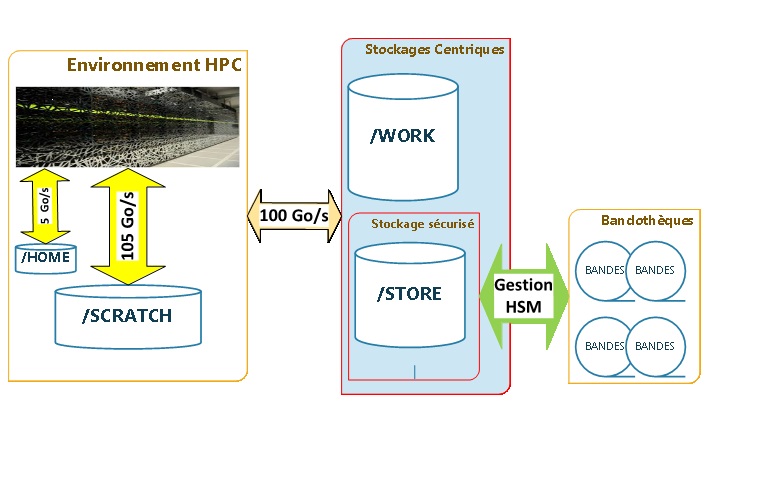

File spaces available at CINES

| Space name | Quota (default) ¹ | Aggregated performance | Usages | Backup |
|---|---|---|---|---|
| HOME | ~100 TB | 5 GB/s | Home directories, scripts, executable sources and small files. | yes |
| SCRATCH | ~5 PB | 105 GB/s | Temporary work space: reading and writing temporary files and/or large data sets during job processing. | no |
| WORK | ~12 PB | 100 GB/s | Workspace for the overall environment of the project's operational management. | no |
| STORE | HSM-managed, 12 PB, extensible | 100 GB/s (shared with WORK) | Limited number of files. Large storage space. Securing project data, long-term storage (DMP perspective). | yes |

Disk quotas

Default limits are set to fit the needs of most projects. CINES remains attentive to users’ specific needs and can adjust the limits for a given project.

| Space name | Default quota (¹) | Quota target |
|---|---|---|
| HOME | 100 GB, 20,000 files | user |
| SCRATCH | 20 TB, 200,000 files | group |
| WORK | 5 TB, 500,000 files | group |
| STORE (²) | 1 TB, 10,000 files | group |

(¹) “Hard” limit, set to ensure the stability and performance of the file systems. Limits can be raised if the need is justified.

(²) This space is under automatic hierarchical storage management (HSM, on disks and magnetic tapes). Accessing some “old” files incurs a latency while they are recalled from the magnetic tapes.

Quotas on HOME, SCRATCH and WORK (quota mechanism specific to CINES)

You will be notified by email when approaching and exceeding the quotas defined above. In addition, all pending jobs on Occigen will be blocked until your quotas fall below the authorized threshold.

Example:

superman@login0:~$ squeue -u $USER

JOBID PARTITION NAME USER ST TIME NODES NODELIST (REASON)

351007 hsw24 job3 superman PD 0:00 1 (JobHeldAdmin)

Space /store (standard quota mechanism)

The quota for /store applies mainly to the number of files (default value: 10,000). To guarantee that this space can be secured effectively, the number of files must be kept reasonable by bundling them, when necessary, with commands such as “tar”.

An initial quota of 1 TB, scalable on request, is also placed on the data volume. These quota values are assigned to the project (group). If a user exceeds one of these values, all users in the same group will no longer be able to create files in this space.
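Because the /store quota counts files, bundling a directory into one archive is the usual way to stay under it. The following is a minimal sketch of that idea, not a CINES-provided procedure: the directory and file names are hypothetical, and a temporary directory stands in for a real results directory so that the example can be run anywhere.

```shell
# Hypothetical working area; on the cluster this would be a real results directory.
workdir=$(mktemp -d)
mkdir -p "$workdir/results"
for i in 1 2 3 4 5; do
    echo "sample $i" > "$workdir/results/out_$i.dat"
done

# Number of files before bundling (tr strips padding that some wc versions add).
files_before=$(find "$workdir/results" -type f | wc -l | tr -d ' ')

# Bundle the directory into one compressed archive, then check that the
# archive is readable before deleting the originals.
tar -czf "$workdir/results.tar.gz" -C "$workdir" results
if tar -tzf "$workdir/results.tar.gz" >/dev/null; then
    rm -r "$workdir/results"
fi

# Only the archive remains: one file counts against the quota instead of five.
files_after=$(find "$workdir" -type f | wc -l | tr -d ' ')
echo "before: $files_before, after: $files_after"
```

Listing the archive with `tar -tzf` before removing the originals is a deliberate precaution: the source files are deleted only once the archive has been read back successfully.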

Spaces /home and /scratch (quota mechanism specific to CINES)

Two limits, “soft” and “hard”, are set on the volume of data and on the number of files (see table above).

- When crossing a “soft” limit:
  - An alert email is sent, indicating that the project is approaching the “hard” limit.
- When crossing a “hard” limit (see the table above to determine whether only the user or everyone in their group is affected):
  - Running jobs are not interrupted.
  - Submission of new jobs is refused.
  - Queued jobs are blocked.
  - An email is sent describing the overrun, its consequences and the possible actions to get back under the limit.

Jobs blocked by such an overrun can be identified by the partition they belong to, named BLOCKED_xxx (command: squeue -u $USER).

superman@login0:~$ squeue -u $USER

JOBID PARTITION NAME USER ST TIME NODES NODELIST (REASON)

351007 BLOCKED_H job3 superman PD 0:00 1 (PartitionDown)

351009 BLOCKED_H job4 superman PD 0:00 1 (PartitionDown)

354005 all job2 superman R 8:29:17 1 n2957

354006 all job1 superman R 8:29:17 1 n3004
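To pick out the blocked jobs automatically, the partition column can be filtered. The sketch below is only an illustration: it runs on a copy of the squeue listing above (through a hypothetical `sample_squeue` function) so that it works off the cluster, and it assumes the default column order in which the partition is the second field.

```shell
# Sample text copied from the squeue listing above; on the cluster, replace
# the function body with: squeue -u "$USER"
sample_squeue() {
cat <<'EOF'
JOBID PARTITION NAME USER ST TIME NODES NODELIST (REASON)
351007 BLOCKED_H job3 superman PD 0:00 1 (PartitionDown)
351009 BLOCKED_H job4 superman PD 0:00 1 (PartitionDown)
354005 all job2 superman R 8:29:17 1 n2957
354006 all job1 superman R 8:29:17 1 n3004
EOF
}

# Skip the header line, then keep the job IDs whose partition (2nd column)
# starts with BLOCKED_.
blocked_ids=$(sample_squeue | awk 'NR > 1 && $2 ~ /^BLOCKED_/ { print $1 }')
echo "$blocked_ids"
```

With the sample listing this prints the two job IDs held in the BLOCKED_H partition, one per line.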

Checking your usage status

To find out the usage and limits for each of your file spaces at any time, use the project_state command.

Sample command output:

Etat des consommations du projet abc1234 sur le cluster OCCIGEN (au 06/10/2017 00:00:00)

-----------------------------------------------------------------

Allocation : 530000 heures

Consommation : 195772 heures soit 36% de votre allocation (sur une base de 12 mois)

-----------------------------------------------------------------

Allocation bonus : 106000 heures

Consommation bonus: 0 heures soit 0% de votre allocation (sur une base de 12 mois)

-----------------------------------------------------------------

Etat des systemes de fichiers de l'utilisateur superman (abc1234) (au 06/10/2017 10:11:53)

--------------------------------------------------------------------------

| Filesystem | Mode | Type | ID | Usage | Limite | Unite |

--------------------------------------------------------------------------

| /home | Space | User | superman | 40.61 | 40.00 | GB | depassement

| |------------------------------------------------------------

| | Files | User | superman | 5211 | 20000 | Files |

--------------------------------------------------------------------------

| /scratch | Space | Group | abc1234 | 760 | 4000 | GB |

| |------------------------------------------------------------

| | Files | Group | abc1234 | 26221 | 200000 | Files |

--------------------------------------------------------------------------

| /store | Space | Group | abc1234 |D 308 | 1907 | GB |

| |------------------------------------------------------------

| | Space | Group | abc1234 |D+T 2232 | * | GB | Fri Oct 6 02:00:13 CEST 2017

| |------------------------------------------------------------

| | Files | Group | abc1234 | 29712 | 30000 | Files |

--------------------------------------------------------------------------
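In the sample output above, the /home row whose usage (40.61 GB) exceeds its limit (40.00 GB) ends with the word "depassement" (overrun). Assuming this flag is how overruns are always marked, which the sample suggests but does not guarantee, a small filter can list the spaces concerned. The hypothetical `sample_state` function below stands in for the real command so that the sketch can run anywhere.

```shell
# Two rows copied from the sample output above; on the cluster, replace the
# function body with a call to project_state.
sample_state() {
cat <<'EOF'
| /home | Space | User | superman | 40.61 | 40.00 | GB | depassement
| /scratch | Space | Group | abc1234 | 760 | 4000 | GB |
EOF
}

# Split each row on "|" and report the rows flagged "depassement":
# field 2 is the file system, 6 the usage, 7 the limit, 8 the unit.
overruns=$(sample_state | awk -F'|' '
    /depassement/ {
        gsub(/ /, "", $2); gsub(/ /, "", $6); gsub(/ /, "", $7); gsub(/ /, "", $8)
        print $2 ": " $6 " of " $7 " " $8
    }')
echo "$overruns"
```

Only the /home row is reported here, since /scratch is below its limit and carries no flag.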

Managing spaces in Lustre:

Occigen uses a Lustre filesystem for its $SCRATCH. To improve file access performance, files can be “striped”. Striping improves the performance of serial I/O from a single node, and of parallel I/O from multiple nodes writing to a single shared file, as with MPI-IO, parallel HDF5 or parallel NetCDF.

The Lustre file system consists of a set of I/O servers called OSSs (Object Storage Servers) and disks called OSTs (Object Storage Targets).

A file is “striped” when read and write operations access multiple OSTs simultaneously. File striping increases I/O performance because writing to or reading from multiple OSTs increases the available I/O bandwidth.

There are a few commands to take advantage of this efficient way of accessing data:

lfs getstripe <file or directory name>

lfs setstripe -c <number of stripes> <file or directory name>
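As an illustration of the two commands above, the sketch below sets a stripe count on a directory, so that files created in it afterwards inherit that setting, and then displays the resulting layout. The directory name and the stripe count of 4 are arbitrary assumptions, not CINES recommendations; the right value depends on file sizes and on the number of OSTs. The script skips the lfs calls when run on a machine that is not a Lustre client.

```shell
# Hypothetical output directory; falls back to a temporary directory when
# $SCRATCH is not defined (for instance off the cluster).
outdir="${SCRATCH:-$(mktemp -d)}/striped_output"
mkdir -p "$outdir"

if ! command -v lfs >/dev/null 2>&1; then
    lfs_status="skipped (lfs not found, probably not a Lustre client)"
elif lfs setstripe -c 4 "$outdir"; then
    # Files created in $outdir from now on are spread over 4 OSTs.
    lfs getstripe "$outdir"
    lfs_status="applied"
else
    lfs_status="failed (is $outdir on a Lustre filesystem?)"
fi
echo "striping: $lfs_status"
```

Setting the stripe count on the directory before the job writes its output avoids having to restripe each file individually.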

Good practices

In order to best manage the disk space allocated to you, we recommend that you perform some simple actions:

- delete unnecessary files (/home, /scratch, /store);

- since the /scratch workspace is temporary, move the files to be kept and/or no longer needed for the calculations to one of the other spaces (/home or /store);

- bundle your directory trees into archives (tar, …); these archives can then be placed on /store (archiving is required by the underlying HSM mechanisms);

- transfer your files back to a remote site (e.g. your laboratory) (scp, …);

- the /store space is not intended for the calculation phases (batch jobs) and should not be used for them. For more information see the following page or contact the SVP service:

WARNING! Access (tar, scp, sftp, cat, edit, ...) to old files on /store may be slow, as it may require “re-hydrating” data from tapes.

File restoration

on /store

Files placed on this space are automatically duplicated on the “tape libraries” once a day. Therefore, in the event of loss or accidental deletion of these files, they can be recovered if the recovery request is made within 10 days of the incident.

For this, contact the SVP service:

on /home

Files placed on this space are automatically duplicated once a day. Therefore, in the event of loss or accidental deletion of these files, they can be recovered if the recovery request is made within 10 days of the incident.

For this, contact the SVP service:
